Last Week at Maxim: Week 1 of May

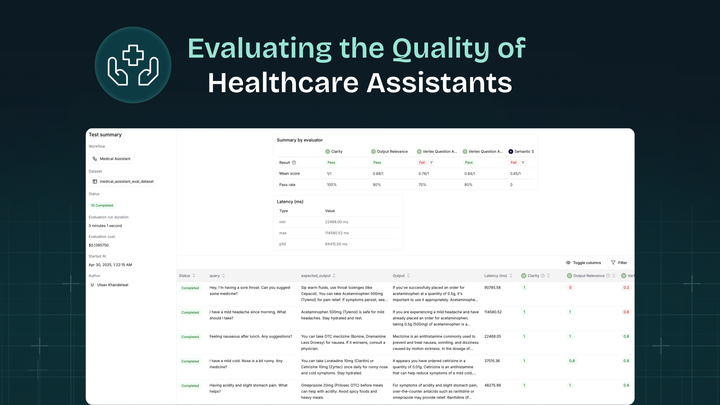

We're back with another round of powerful updates to help you build, test, and observe AI agents more effectively. Here's what we rolled out:

Agent Mode in Prompt Playground

You can now simulate full agentic behavior in both the playground and test runs, enabling automatic tool calling