Mastering the Art of Prompt Engineering: A Practical Guide for Better AI Outcomes

Prompt engineering might just be one of the most accessible yet impactful skills in artificial intelligence today. At its core, prompt engineering involves crafting specific inputs that guide Large Language Models (LLMs) to produce precise and accurate outputs, which makes it central to AI quality. You don't have to be a machine learning expert to create effective prompts, but perfecting them requires careful thought and practice.

This guide walks through the key insights and techniques from the prompt engineering whitepaper published by Google (see References) to help you become proficient in prompt engineering.

Why Prompt Engineering Matters

With generative AI models rapidly growing in capabilities, the difference between average and excellent results often boils down to how well you phrase your prompts. Well-designed prompts ensure your model understands precisely what you're looking for, minimizing ambiguity and significantly boosting the accuracy and quality of responses.

Behind the Scenes: How Prompt Engineering Works

To understand prompt engineering, think of an LLM as an incredibly skilled conversationalist. It uses patterns learned from massive datasets to predict the next token (roughly, the next word or word fragment) in a sequence. The more effectively your prompt sets up the "context," the easier it becomes for the model to produce coherent and targeted responses.

Prompt engineering includes tuning key model parameters such as:

- Temperature: Controls randomness. Lower temperature leads to predictable, focused answers, while higher temperature allows creative, diverse responses.

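- Top-K & Top-P sampling: Top-K restricts sampling to the K most probable next tokens, while Top-P (nucleus sampling) restricts it to the smallest set of tokens whose cumulative probability reaches P. Both balance creativity against accuracy.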

Here's a quick configuration cheat-sheet:

- For accuracy-focused tasks: Temperature = 0.1, Top-P = 0.9, Top-K = 20

- For creative tasks: Temperature = 0.9, Top-P = 0.99, Top-K = 40
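To make the three sampling settings concrete, here is a minimal sketch of how temperature, Top-K, and Top-P could be applied to a model's raw scores before a token is drawn. The function names, the toy logits, and the order of the filters are assumptions made for illustration; real inference stacks may combine these steps differently.

```python
import math
import random

def filter_distribution(logits, temperature=1.0, top_k=0, top_p=1.0):
    """Return {token: probability} after temperature, Top-K, and Top-P filtering."""
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: all mass on the best token.
        best = max(logits, key=logits.get)
        return {best: 1.0}
    # Temperature scaling followed by a numerically stable softmax.
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(((tok, w / total) for tok, w in weights.items()),
                    key=lambda pair: pair[1], reverse=True)
    # Top-K: keep only the K most probable tokens (0 disables the filter).
    if top_k > 0:
        ranked = ranked[:top_k]
    # Top-P: keep the smallest prefix whose cumulative probability reaches P.
    kept, cumulative = [], 0.0
    for tok, prob in ranked:
        kept.append((tok, prob))
        cumulative += prob
        if cumulative >= top_p:
            break
    norm = sum(prob for _, prob in kept)
    return {tok: prob / norm for tok, prob in kept}

def sample_token(logits, rng=None, **settings):
    """Draw one token from the filtered distribution."""
    rng = rng or random.Random()
    dist = filter_distribution(logits, **settings)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]

# Hypothetical scores for four candidate next tokens.
toy_logits = {"slow": 2.0, "fast": 1.0, "purple": 0.1, "banana": -1.0}

# The two cheat-sheet configurations applied to the same scores.
focused = filter_distribution(toy_logits, temperature=0.1, top_p=0.9, top_k=20)
creative = filter_distribution(toy_logits, temperature=0.9, top_p=0.99, top_k=40)
print(focused)   # a single token survives the low-temperature setting
print(creative)  # all four tokens remain candidates
```

With the accuracy-focused values, the low temperature concentrates almost all probability on the top token, so Top-P cuts everything else. With the creative values, the distribution stays flat enough that every toy token remains in play.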

Powerful Prompting Techniques Explained

Zero-shot, One-shot, Few-shot

- Zero-shot prompting simply gives a direct instruction without examples.

'''

Classify the sentiment of the following sentence as positive, negative, or neutral:

Sentence: "I can't believe how slow the service was at the restaurant."

Sentiment:

'''

Fig 1. Prompting without providing any examples

- One-shot prompting provides a single example, guiding the model by analogy.

'''

Classify the sentiment of the following sentences as positive, negative, or neutral.

Example:

Sentence: "The staff was incredibly helpful and friendly."

Sentiment: Positive

Now classify this sentence:

Sentence: "I can't believe how slow the service was at the restaurant."

Sentiment:

'''

Fig 2. Prompting by providing the model a single example of the task

- Few-shot prompting provides multiple examples, solidifying patterns for the model to follow.

'''

Classify the sentiment of the following sentences as positive, negative, or neutral.

Examples:

Sentence: "The staff was incredibly helpful and friendly."

Sentiment: Positive

Sentence: "The food was okay, nothing special."

Sentiment: Neutral

Sentence: "My order was wrong and the waiter was rude."

Sentiment: Negative

Now classify this sentence:

Sentence: "I can't believe how slow the service was at the restaurant."

Sentiment:

'''

Fig 3. Prompting by providing the model with a few examples of the task
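The three prompts above differ only in how many labeled examples they carry, so they can be produced by one small helper. This is a sketch; `build_sentiment_prompt` is a hypothetical name, and the wording simply mirrors the figures above.

```python
def build_sentiment_prompt(sentence, examples=()):
    """Build a zero-, one-, or few-shot prompt depending on len(examples)."""
    lines = [
        "Classify the sentiment of the following sentences as positive, negative, or neutral.",
        "",
    ]
    if examples:
        lines.append("Examples:" if len(examples) > 1 else "Example:")
        for text, label in examples:
            lines += [f'Sentence: "{text}"', f"Sentiment: {label}", ""]
        lines.append("Now classify this sentence:")
    # The prompt ends on an open "Sentiment:" slot for the model to complete.
    lines += [f'Sentence: "{sentence}"', "Sentiment:"]
    return "\n".join(lines)

target = "I can't believe how slow the service was at the restaurant."
shots = [
    ("The staff was incredibly helpful and friendly.", "Positive"),
    ("The food was okay, nothing special.", "Neutral"),
    ("My order was wrong and the waiter was rude.", "Negative"),
]

zero_shot = build_sentiment_prompt(target)
one_shot = build_sentiment_prompt(target, shots[:1])
few_shot = build_sentiment_prompt(target, shots)
print(few_shot)
```

Keeping the examples in a list makes it easy to swap them out or to test how many shots a given task actually needs.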

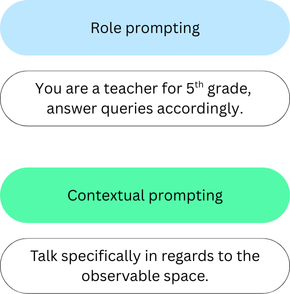

Role, System, and Contextual Prompting

- Role prompting assigns an identity to the AI, such as a teacher or travel guide.

- System prompting sets the overall context and purpose for the model, including explicit instructions on the desired format or structure of responses.

- Contextual prompting embeds background information, helping the model tailor its response specifically to the current scenario.
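These three ingredients are often combined in a single prompt. The sketch below assembles them as labeled sections; the `System:`, `Role:`, `Context:`, and `Task:` labels and the function name are illustrative assumptions, not a format any particular model requires.

```python
def compose_prompt(task, role=None, system=None, context=None):
    """Join optional system, role, and context sections ahead of the task."""
    parts = []
    if system:
        parts.append(f"System: {system}")
    if role:
        parts.append(f"Role: {role}")
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Task: {task}")
    return "\n\n".join(parts)

prompt = compose_prompt(
    task="Suggest three places to visit.",
    role="You are a travel guide.",
    system="Answer as a numbered list with one sentence per item.",
    context="The traveler has one afternoon and prefers museums.",
)
print(prompt)
```

Each section can be changed independently, which makes it easier to see which ingredient is responsible for a change in the output.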

Step-back Prompting and Chain of Thought (CoT)

- Step-back prompting is a method designed to improve an AI model’s understanding of complex problems by guiding it to first think broadly before diving into details. Instead of immediately tackling a specific task, the model first answers a related, general question, which sets context and activates relevant background knowledge.

- Chain of Thought prompting explicitly encourages the AI to explain its reasoning step-by-step, rather than rushing to a conclusion. CoT can substantially improve performance on complex problems, particularly those requiring logic, math, or sequential reasoning.
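Both techniques can be sketched as thin wrappers around a model call. Here `ask_model` stands for any function that takes a prompt string and returns the model's text; it is a placeholder, and `fake_model` below only records what it is asked so the control flow is visible without a real API.

```python
def chain_of_thought_prompt(question):
    """Append an explicit request for step-by-step reasoning."""
    return f"{question}\nLet's think step by step."

def step_back_answer(question, general_question, ask_model):
    """Answer a broad question first, then reuse that answer as background."""
    background = ask_model(general_question)
    final_prompt = (
        f"Background:\n{background}\n\n"
        f"Using the background above, answer:\n{question}"
    )
    return ask_model(final_prompt)

# Stand-in for a real model call (assumption): records prompts, returns a tag.
calls = []
def fake_model(prompt):
    calls.append(prompt)
    return f"<reply {len(calls)}>"

answer = step_back_answer(
    question="Why did my API request return HTTP 429?",
    general_question="What do HTTP 4xx status codes indicate in general?",
    ask_model=fake_model,
)
print(calls[1])  # the second prompt embeds the first reply as background
print(chain_of_thought_prompt("What is 17 * 24?"))
```

Step-back prompting costs two model calls instead of one, so it is usually worth it only when the specific question benefits from the extra background.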

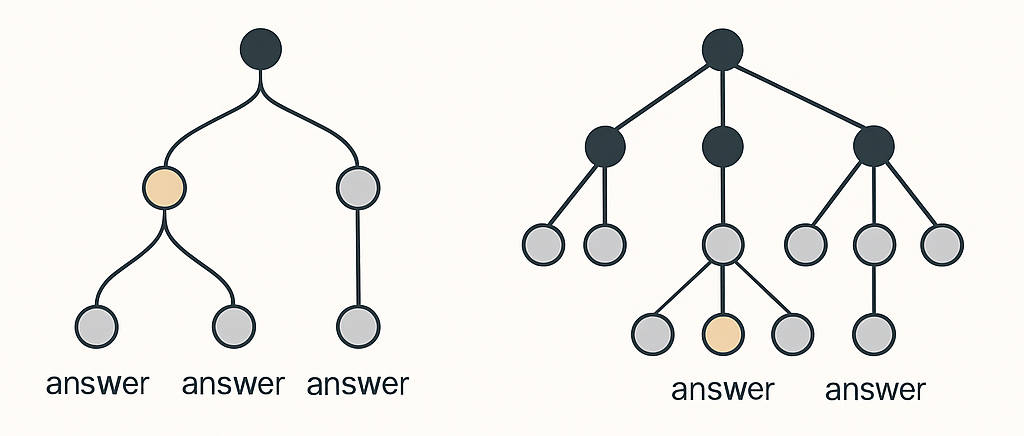

Self-consistency & Tree of Thoughts (ToT)

- Self-consistency involves generating multiple reasoning paths, then selecting the most common answer.

- Tree of Thoughts (ToT) extends CoT by allowing exploration of multiple reasoning paths simultaneously, ideal for complex tasks.
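Self-consistency reduces to sampling several answers and taking a majority vote. In this sketch, `sample_answer` is a hypothetical callable that would run a CoT prompt at a non-zero temperature and return only the final answer; the canned answers below stand in for real model output. Tree of Thoughts needs a search procedure over partial reasoning steps and is not shown here.

```python
from collections import Counter

def self_consistent_answer(prompt, sample_answer, n_samples=5):
    """Sample several final answers and return the most common one with its vote share."""
    answers = [sample_answer(prompt) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_samples

# Canned final answers standing in for five sampled reasoning paths (assumption).
canned = iter(["42", "41", "42", "42", "40"])
def fake_sampler(prompt):
    return next(canned)

winner, share = self_consistent_answer("What is 6 * 7?", fake_sampler, n_samples=5)
print(winner, share)  # 42 0.6
```

The vote share doubles as a rough confidence signal: a narrow majority suggests the prompt or the task deserves a closer look.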

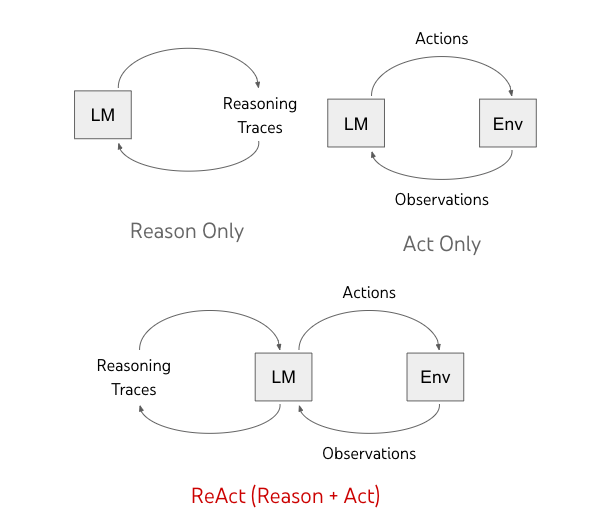

ReAct (Reason and Act)

ReAct prompting combines the model's internal reasoning with external actions, such as running a search or calling external APIs, to handle more intricate tasks.
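A minimal sketch of the loop: the model writes a thought and an action, the program runs the matching tool, and the observation is appended to the transcript for the next turn. The `Action: tool[input]` and `Final Answer:` conventions, the `add` tool, and the scripted model replies are all assumptions made for illustration; agent frameworks define their own formats.

```python
import re

# Assumed action syntax: "Action: tool_name[tool input]".
ACTION_PATTERN = re.compile(r"Action:\s*(\w+)\[(.*)\]")

def react_loop(question, ask_model, tools, max_steps=5):
    """Alternate model reasoning and tool calls until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = ask_model(transcript)
        transcript += reply + "\n"
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip(), transcript
        match = ACTION_PATTERN.search(reply)
        if match:
            tool_name, tool_input = match.groups()
            tool = tools.get(tool_name)
            observation = tool(tool_input) if tool else f"unknown tool {tool_name}"
            # Feed the tool result back so the next model turn can use it.
            transcript += f"Observation: {observation}\n"
    return None, transcript

# Scripted replies standing in for a real model (assumption).
scripted = iter([
    "Thought: I should add the numbers.\nAction: add[2, 3]",
    "Thought: The tool returned the sum.\nFinal Answer: 5",
])
def scripted_model(transcript):
    return next(scripted)

tools = {"add": lambda text: str(sum(int(part) for part in text.split(",")))}
answer, transcript = react_loop("What is 2 + 3?", scripted_model, tools)
print(transcript)
```

The `max_steps` cap matters in practice: without it, a model that never emits a final answer would loop indefinitely.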

Key Results and Why They Matter

These techniques can markedly improve performance across various applications:

- Increased accuracy: Techniques like CoT and self-consistency significantly improve task performance, especially in reasoning-intensive scenarios.

- Reduced ambiguity: Structured prompts and JSON schemas sharply reduce the likelihood of vague or irrelevant responses.

- Scalability: Automatic Prompt Engineering (APE) allows for prompt optimization at scale, generating and evaluating prompt variants systematically. It works in three steps:

  - Generate prompt variants: APE tools can automatically create multiple versions of a prompt with slight changes (e.g., wording, structure, tone).

  - Evaluate automatically: These variants are then tested on a task (like answering questions or summarizing text), and their performance is measured using some predefined metric (like accuracy, relevance, etc.).

  - Choose the best: The system identifies the best-performing prompt(s) without human trial-and-error.
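The evaluate-and-choose part of APE can be sketched in a few lines. In a real APE setup the variants would typically be generated by a model; here they are written by hand, and `fake_model`, the two-item evaluation set, and the exact-match metric are assumptions chosen to keep the example self-contained.

```python
def pick_best_prompt(variants, eval_set, run_prompt):
    """Score each prompt template by exact-match accuracy and return the best one."""
    scores = {}
    for template in variants:
        correct = sum(
            run_prompt(template.format(text=text)).strip().lower() == expected
            for text, expected in eval_set
        )
        scores[template] = correct / len(eval_set)
    best = max(scores, key=scores.get)
    return best, scores

# Stand-in model (assumption): gives a bare label only when asked for one word.
def fake_model(prompt):
    label = "negative" if "rude" in prompt else "positive"
    if "one word" in prompt:
        return label
    return f"The sentiment seems {label}."

variants = [
    "Classify the sentiment: {text}",
    "Answer in one word (positive, negative, or neutral). Sentiment of: {text}",
]
eval_set = [
    ("The staff was incredibly helpful and friendly.", "positive"),
    ("My order was wrong and the waiter was rude.", "negative"),
]

best, scores = pick_best_prompt(variants, eval_set, fake_model)
print(best)
```

The metric is the part most worth customizing: exact match suits classification, while summarization or open-ended tasks need a softer measure of quality.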

Limitations and Future Challenges

Prompt engineering isn't without challenges. Models still struggle with very complex reasoning, math accuracy, and managing lengthy outputs. Additionally, JSON and structured prompts, though useful, can become costly due to increased token usage. Tools like JSON repair and schema validation are helpful, but add complexity.
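As a rough illustration of what validation adds, the sketch below extracts a JSON object from a model reply and checks it against a tiny hand-written schema. The `sentiment` field and its allowed values are assumptions carried over from the earlier classification examples; the "repair" here only strips surrounding chatter and does not attempt to fix truncated JSON.

```python
import json

ALLOWED_SENTIMENTS = {"positive", "negative", "neutral"}

def parse_sentiment_json(raw):
    """Return the parsed object, or None if the reply is not usable."""
    # Light repair: ignore any text before the first "{" or after the last "}".
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        data = json.loads(raw[start:end + 1])
    except json.JSONDecodeError:
        return None
    # Minimal schema check: the field must exist and hold an allowed value.
    if data.get("sentiment") not in ALLOWED_SENTIMENTS:
        return None
    return data

chatty = 'Sure! {"sentiment": "negative"} Hope that helps.'
truncated = '{"sentiment": "neg'
print(parse_sentiment_json(chatty))
print(parse_sentiment_json(truncated))
```

Returning `None` instead of raising lets the caller decide whether to retry the prompt, fall back to a default, or log the failure.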

Future research should tackle these issues directly, streamlining structured prompting and enhancing reasoning capabilities further.

Best Practices at a Glance

- Keep it simple: Concise, unambiguous prompts tend to perform better.

- Be explicit about output format: Clearly state the format you want the response in.

- Use structured outputs: JSON formatting improves reliability.

- Document iterations: Track all prompt variations systematically.

- Adapt to model updates: Regularly refine prompts with new model versions.
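One lightweight way to document iterations is a structured record per attempt that can be exported to a spreadsheet. The field names below and the `example-model` identifier are assumptions; adapt them to whatever you actually vary between runs.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields

@dataclass
class PromptAttempt:
    """One logged prompt iteration (fields are illustrative)."""
    name: str
    model: str
    temperature: float
    top_p: float
    top_k: int
    prompt: str
    output: str
    verdict: str

def to_csv(attempts):
    """Serialize attempts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(PromptAttempt)])
    writer.writeheader()
    for attempt in attempts:
        writer.writerow(asdict(attempt))
    return buffer.getvalue()

log = [
    PromptAttempt("sentiment-v1", "example-model", 0.1, 0.9, 20,
                  "Classify the sentiment: ...", "The sentiment seems negative.", "too verbose"),
    PromptAttempt("sentiment-v2", "example-model", 0.1, 0.9, 20,
                  "Answer in one word. Sentiment of: ...", "negative", "ok"),
]
print(to_csv(log))
```

Recording the sampling settings alongside the prompt text matters because the same prompt can behave differently once the model or its configuration changes.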

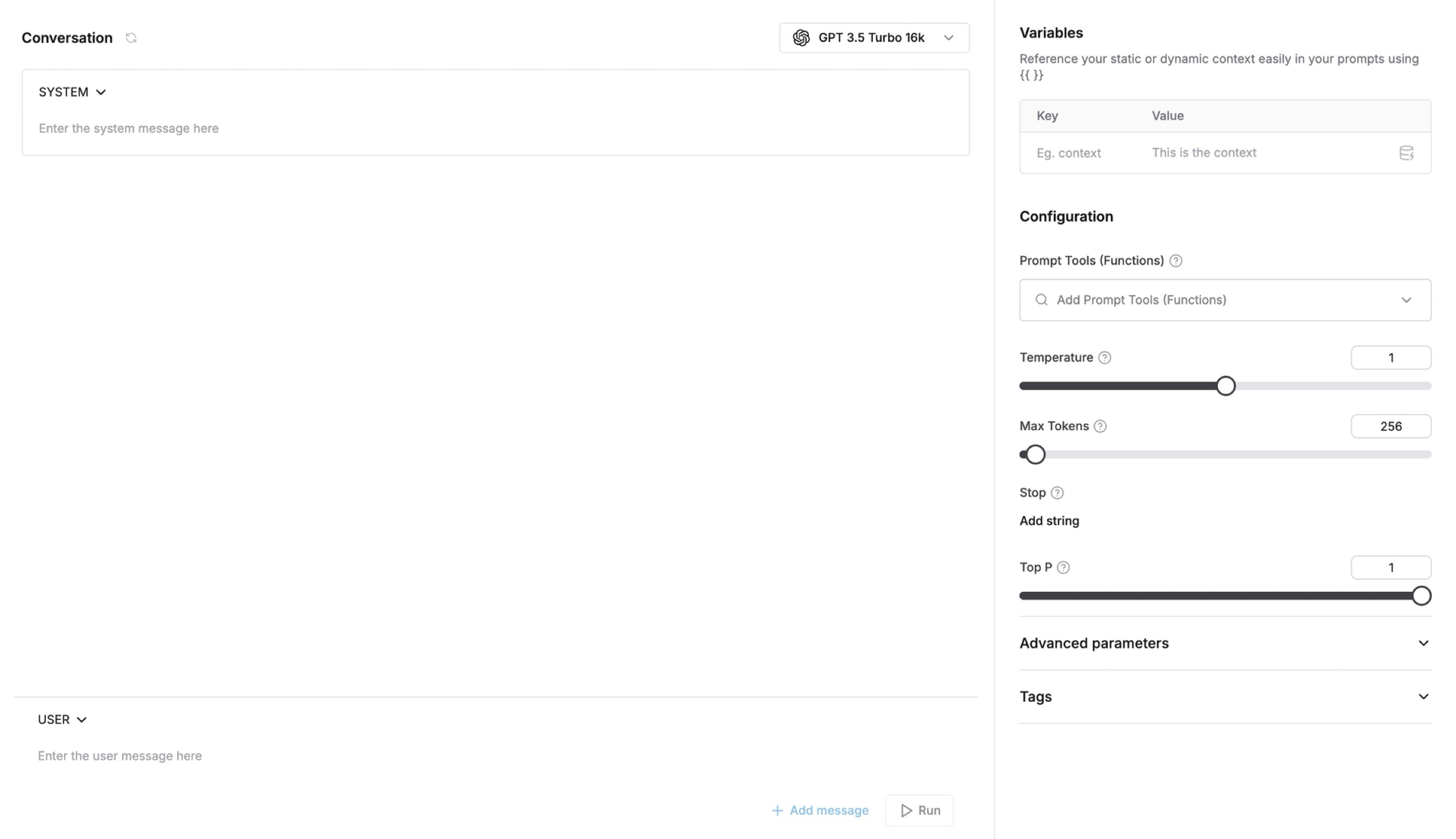

How to use Maxim AI in your Prompt Engineering Journey

Crafting the perfect prompt often involves extensive trial and error, something Maxim AI makes dramatically easier. Maxim AI is a dedicated platform for designing, evaluating, and optimizing prompts efficiently and systematically.

Here’s how Maxim AI transforms prompt engineering:

Interactive Prompt Playground

Experiment with your prompts in real-time, adjusting models, parameters, and input structures without any hassle. You can immediately see how changes influence the outputs, saving valuable development time.

Prompt Version Control

Keep track of various prompt iterations with built-in version management. This enables effortless collaboration, clear documentation, and quick comparisons of prompt performance over time.

Side-by-Side Prompt Comparison

Easily evaluate different prompt variations side-by-side, ensuring that your final choice consistently yields the most accurate and relevant responses.

Automated Evaluations

Use Maxim’s built-in or custom evaluation metrics to quantitatively measure prompt effectiveness, ensuring consistently high-quality AI performance across deployments.

Final Thoughts: The Power of Prompting

Effective prompt engineering unlocks the true potential of generative AI, turning promising technology into practical solutions. As models evolve, so will the prompts we create, which makes continuous learning and experimentation integral parts of prompt engineering.

Prompt engineering isn’t just about writing better instructions; it’s about thinking like an AI, structuring problems clearly, and anticipating possible responses. By mastering these skills, you'll position yourself at the forefront of AI-driven innovation.

Whether refining models in production, testing new AI capabilities, or simply exploring the power of generative AI, Maxim AI accelerates your prompt engineering journey, transforming great AI potential into real-world success.

Ready to take your prompt engineering to the next level? Explore Maxim AI today.

References

- Prompt Engineering: https://www.kaggle.com/whitepaper-prompt-engineering

- Prompt engineering on Maxim