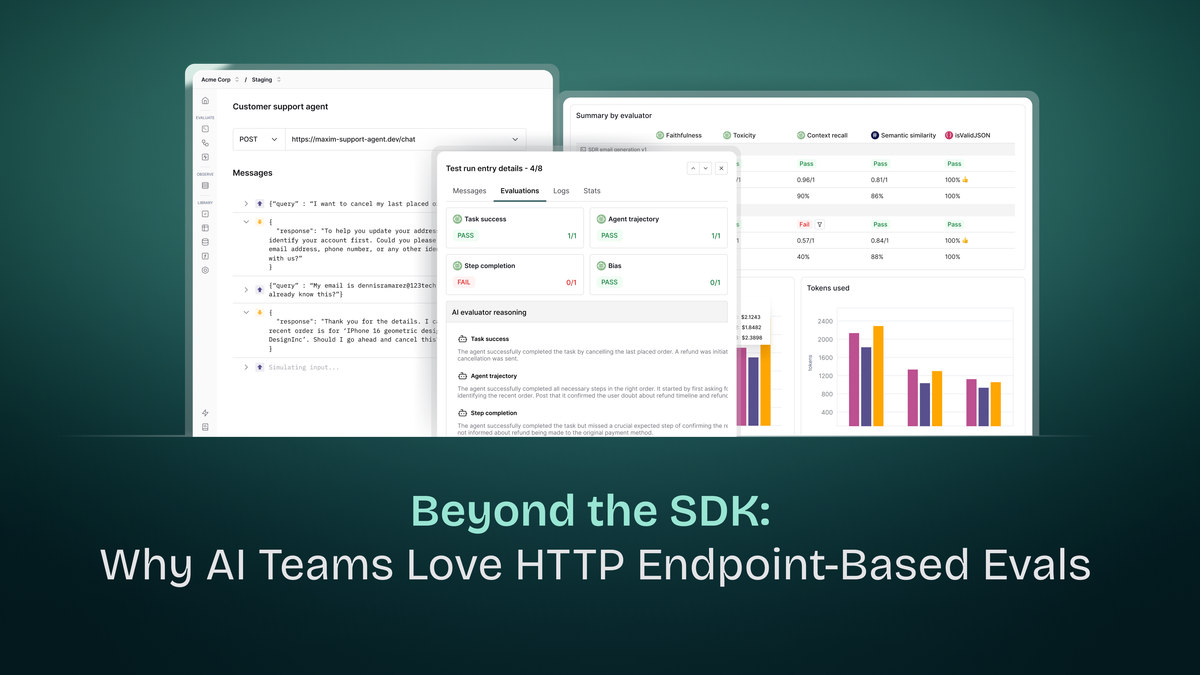

Beyond the SDK: Why AI Teams Love HTTP Endpoint-Based Evals

Since the beginning, HTTP Endpoint-Based Offline Evals have been a core feature of the Maxim platform and a favorite among our users.

While our SDKs allow engineers to integrate evaluations directly into their codebase, a purely code-based approach introduces friction, often limiting who can run them and how they are orchestrated. Running an eval often requires pulling a branch, setting up a local environment, and manually orchestrating scripts. It ties the execution of tests to the engineering environment, dropping teammates who are closest to user experience, often Product Managers and domain experts, out of the eval loop. We built the HTTP Endpoint capability to remove that friction allowing AI teams to seamlessly and rapidly deliver on their AI vision without constraints. Conceptually similar to "Postman for AI," it allows teams to connect their fully assembled, complex agents to Maxim via a standard API endpoint. This setup decouples the evaluation logic from the agent's source code. It transforms what used to be a complex orchestration task into a single-click action from the UI.

Productivity and Collaboration Gains

The primary utility of this feature is speed. It treats the AI Agent as a black box service, exposing only the nodes you wish to evaluate. Whether the agent is running on staging or prod environment, it can be evaluated directly via the Maxim UI.

This separation unlocks significant productivity gains for cross-functional teams. Product Managers and domain experts can run evaluations on new agent versions without needing to touch the codebase or wait on engineering resources. They simply bring the API endpoint on the platform once, and then every time they select the dataset, choose their quality metrics, and execute the run. This streamlines the feedback loop across the entire development lifecycle and allows teams to iterate faster.This capability is also a game-changer for regression testing and CI/CD. Because the evaluation logic is decoupled from the internal code, engineering teams can easily integrate Maxim evaluations into their pipelines using tools like GitHub Actions. A simple trigger can run your agent against a gold-standard dataset every time a PR is opened, ensuring that performance regressions are caught immediately without requiring complex test harness maintenance for every new agent version.

Solving State Management in Multi-Turn Simulations

One of the most complex aspects of offline evaluation is testing multi-turn conversations. Scripting a conversation flow where the output of one turn influences the input of the next is cumbersome to maintain in code.

The HTTP workflow simplifies this by allowing teams to run multi-turn simulations directly against the API. Maxim orchestrates the conversation, sending user inputs and capturing agent responses while maintaining the session context. We address the challenge of stateless APIs by generating a unique {{simulation_id}} for every session. This variable is injected into the request body or headers, allowing your agent to maintain memory and context across multiple turns without requiring complex client-side logic.For agents that require more explicit context, you have full control over the payload structure, allowing you to pass the entire conversation history via the request body. We also support pre- and post-request scripts, giving you the flexibility to manipulate data or handle complex session logic dynamically before and after every interaction.

Secure Staging with Vault and Environments

A common challenge with HTTP-based testing is handling authentication and environment-specific configurations securely. Teams often need to test agents sitting behind auth walls or switch between staging and production endpoints seamlessly.

We designed this feature to work natively with Maxim Vault and Environments. Teams can securely store API keys and auth tokens in the Vault, injecting them into the HTTP headers at runtime. This ensures that you can test secure, internal agents without exposing credentials or hardcoding sensitive data into your test configuration.

Standardizing Quality for a "Swarm of Agents"

This architectural pattern scales remarkably well for large organizations. A Global 500 utility company is currently leveraging this feature to manage quality across a massive internal initiative. They are building a "swarm of agents" with distinct agents developed by independent teams across the organization.

By standardizing on HTTP Endpoint-Based Evals, they have established a unified quality gateway. Regardless of how an individual team builds their agent, they expose it via a standard API schema. This allows the central platform team to run consistent evaluations across hundreds of users and distinct projects. It ensures that every agent in the swarm meets the organization's performance and safety standards before production release.

Whether you are a single developer testing a prototype or a global enterprise managing hundreds of agents, moving your evaluations to an HTTP-based workflow bridges the gap between code and quality. It empowers every member of your team - from engineering to product - to ensure your agents are reliable and ready for the real world.